Step 1 : Create hive directory

sudo mkdir hive

cd hive

>pwd

/usr/local/hive/

Step 2 : Download Hive tar (Supported version )

We will download hive 3.X as we are using Hadoop 3.XX version

Sudo wget http://apachemirror.wuchna.com/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz

Resolving apachemirror.wuchna.com (apachemirror.wuchna.com)... 159.65.154.237

Connecting to apachemirror.wuchna.com (apachemirror.wuchna.com)|159.65.154.237|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 278813748 (266M) [application/x-gzip]

Saving to: ‘apache-hive-3.1.2-bin.tar.gz’

apache-hive-3.1.2-bin.tar.gz 100%

[=======================================================================>] 265.90M 3.66MB/s in 84s

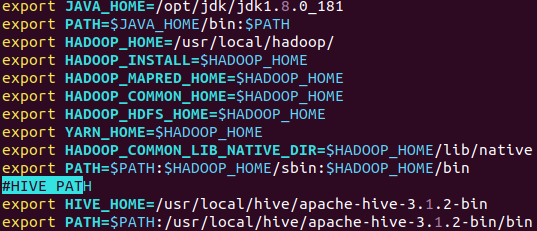

Step 3 : Edit the “.bashrc” file to update environment variables for user

#HIVE PATH (Path of hive extracted directory)

vi ~/.bashrc

export HIVE_HOME=/usr/local/hive/apache-hive-3.1.2-bin

export PATH=$PATH:/usr/local/hive/apache-hive-3.1.2-bin/bin

#Run below command to make the changes work in same terminal.

source ~/.bashrc

#In bashrc file you will also find HADOOP environmental variables already set as shown in the below image.

Step 4: Check hive version.

hduser@bd:/usr/local/hive$ hive --version

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive 3.1.2

Git git://HW13934/Users/gates/tmp/hive-branch-3.1/hive -r 8190d2be7b7165effa62bd21b7d60ef81fb0e4af

Compiled by gates on Thu Aug 22 15:01:18 PDT 2019

From source with checksum 0492c08f784b188c349f6afb1d8d9847

Step 5: Create Hive directories within Hadoop File System. The directory ‘warehouse’ is the location to store the table or data related to hive.

Command:

hduser@bd:/usr/local/hive$ hdfs dfs -mkdir -p /user/hive/warehouse

hduser@bd:/usr/local/hive$ hdfs dfs -mkdir /tmp

#CHECK OLD PERMISSION

hduser@bd:/usr/local/hive$ hdfs dfs -ls /user/hive/

Found 1 items

drwxr-xr-x - hduser supergroup 0 2020-07-04 01:07 /user/hive/warehouse

#GIVE WRITE PERMISSION TO NEW DIRECTORY

hduser@bd:/usr/local/hive$ hdfs dfs -chmod g+w /user/hive/warehouse

hduser@bd:/usr/local/hive$ hdfs dfs -chmod g+w /tmp

#CHECK NEW PERMISSION

hduser@bd:/usr/local/hive$ hdfs dfs -ls /user/hive/

Found 1 items

drwxrwxr-x - hduser supergroup 0 2020-07-04 01:07 /user/hive/warehouse

Step 7: Set Hadoop path in hive-env.sh

In this file we will set HADOOP_HOME path and Hive conf(hive-env.sh) file path

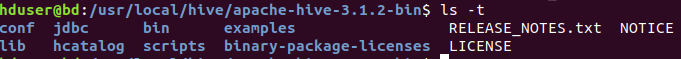

hduser@bd:/usr/local/hive$ ls

apache-hive-3.1.2-bin

hduser@bd:/usr/local/hive$ cd apache-hive-3.1.2-bin/

hduser@bd:/usr/local/hive/apache-hive-3.1.2-bin$ ls

bin binary-package-licenses conf examples hcatalog jdbc lib LICENSE NOTICE RELEASE_NOTES.txt scripts

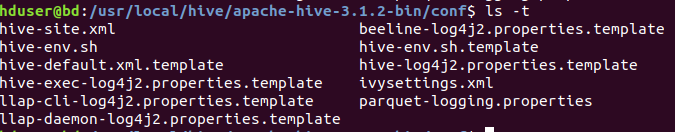

#Create hive-env.sh file from hive-env.sh.template file in conf directoey

hduser@bd:/usr/local/hive/apache-hive-2.1.0-bin$ sudo cp conf/hive-env.sh.template conf/hive-env.sh

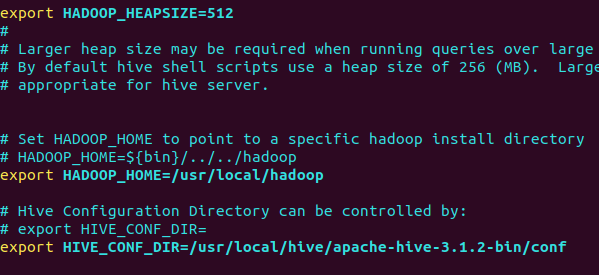

Edit hive-env.sh and set configurations given below

#export HADOOP_HEAPSIZE=512

#export HADOOP_HOME=/usr/local/hadoop

#export HIVE_CONF_DIR=/usr/local/hive/apache-hive-3.1.2-bin/conf

hduser@bd:/usr/local/hive$ vi apache-hive-3.1.2-bin/conf/hive-env.sh

Step 8: Edit hive-site.xml

This file having hive configurations like mapper,reducers,hive metadata database connnection,warehouse directory,execution engine etc details. HIVE by Default uses derby database but we will setup with PostgreSQL Database to store metadata(schema,tables) Information.

#create hive-site.xml from given proto-hive-site.xml file in conf directory

hduser@bd:sudo cp /usr/local/hive/apache-hive-2.1.0-bin/hcatalog/etc/hcatalog/proto-hive-site.xml ../apache-hive-2.1.0-bin/conf/hive-site.xml

#Configure for warehouse directory database connection (POSTGRESQL)

Install PostgreSQL and Create Hive Metastore Database to store metadata

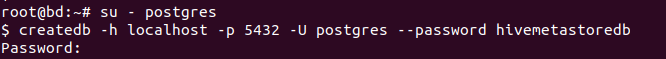

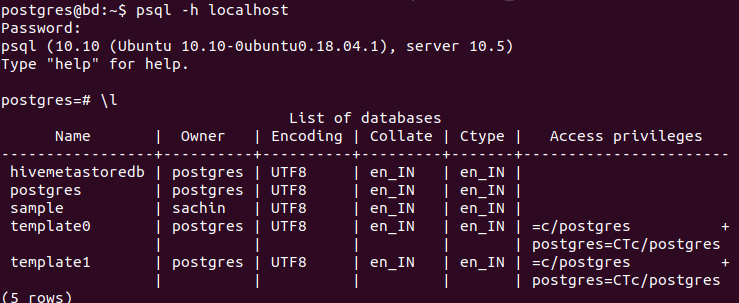

$ su - postgres

$ createdb -h localhost -p 5432 -U postgres --password hivemetastoredb

Once Database created login and verify it

hive-site.xml (Edit for database,warehouse and hive engine parameters

hduser@bd:/usr/local/hive$ cat apache-hive-3.1.2-bin/conf/hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>org.postgresql.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:postgresql://localhost:5432/hivemetastoredb</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>postgres</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>postgres</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.enable.doAs</name>

<value>true</value>

</property>

<property>

<name>hive.execution.engine</name>

<value>mr</value>

</property>

<property>

<name>hive.metastore.port</name>

<value>9083</value>

</property>

<property>

<name>mapreduce.input.fileinputformat.input.dir.recursive</name>

<value>true</value>

</property>

</configuration>

Step 9: Create Hive schema (PostgreSQL)

Schematool utility given in hive downloaded directory under bin location. This will auto create required schema, tables, functions etc in the hivemetastoredb database.

hduser@bd:/usr/local/hive/apache-hive-3.1.2-bin/bin/schematool -initSchema -dbType postgres

OR

hduser@bd:~$schematool -initSchema -dbType postgres

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:postgresql://localhost:5432/hivemetastoredb

Metastore Connection Driver : org.postgresql.Driver

Metastore connection User: postgres

Starting metastore schema initialization to 3.1.0

Initialization script hive-schema-3.1.0.postgres.sql

Initialization script completed

schemaTool completed

We finished with installation and configuration, we can start hive.

Step 10: Launch Hive

We can start hive by many ways direct cli without starting hiveserver2 and hivemetastore.

a) simple hive shell command

hduser@bd:~$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = eb7e5d02-a668-4922-8e2a-d29d6c7204fa

Logging initialized using configuration in jar:file:/usr/local/hive/apache-hive-3.1.2-bin/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Hive Session ID = a675cb5a-ccb8-4c6d-b6c3-64098f06b49a

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases;

OK

default

Time taken: 0.783 seconds, Fetched: 1 row(s)

b) Start hiveserver2 & hive metastore ( This allows you to use hive from other application behaves as gateway also provide GUI to track queries)

START HIVE SERVER

#CREATE Directory

mkdir ~/hiveserver2log

cd ~/hiveserver2log

#Execute "hiveserver2"

hduser@bd:~/hiveserver2log$ nohup hiveserver2 &

#Execute "hive" to run hiveserver2 service

hduser@bd:~/hiveserver2log$ nohup hive --service hiveserver2 &

#Start HiveServer using custom parameters

hduser@bd:~/hiveserver2log$ nohup hive --service hiveserver2 --hiveconf hive.server2.thrift.port=10000 --hiveconf hive.root.logger=INFO,console &

#Check "nohup.out" file

hduser@bd:~/hiveserver2log$ head nohup.out

2020-07-05 02:13:33: Starting HiveServer2

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 61860a5f-4636-4a11-a8f9-7a7561a17ba1

Hive Session ID = 29c4896c-cec0-4771-b6c4-996b1b2d9726

Hive Session ID = 4652c5d6-be07-483d-a903-8f505061af9a

Hive Session ID = 56fb1770-5543-4b5a-997b-40c33451e9b4

HIVE SERVER STATUS & LOG FILES

hduser@bd:~/hiveserver2log$ jps -ml | grep HiveServer2

18643 org.apache.hadoop.util.RunJar /usr/local/hive/apache-hive-3.1.2-bin/lib/hive-service-3.1.2.jar org.apache.hive.service.server.HiveServer2 --hiveconf hive.server2.thrift.port=10000 --hiveconf hive.root.logger=INFO,console

#Pass above process pid in ps hduser@bd:~/hiveserver2log$ ps -fp 18643 | less UID PID PPID C STIME TTY TIME CMD

hduser 18643 2662 0 12:22 pts/0 00:04:30 /opt/jdk/jdk1.8.0_181/bin/java -Dproc_jar -Djava.library.path=/usr/local/hadoop/lib/native

-Dproc_hiveserver2 -Dlog4j.configurationFile=hive-log4j2.properties -Djava.util.logging.config.file=/usr/local/hive/apache-hive-3.1.2-bin/conf/parquet-logging.properties -Djline.terminal=jline.UnsupportedTerminal -Dyarn.log.dir=/usr/local/hadoop/logs -Dyarn.log.file=hadoop.log -Dyarn.home.dir=/usr/local/hadoop -Dyarn.root.logger=INFO,console -Xmx512m -Dhadoop.log.dir=/usr/local/hadoop/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/usr/local/hadoop -Dhadoop.id.str=hduser -Dhadoop.root.logger=INFO,console -Dhadoop.policy.file=hadoop-policy.xml -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.util.RunJar /usr/local/hive/apache-hive-3.1.2-bin/lib/hive-service-3.1.2.jar org.apache.hive.service.server.HiveServer2 --hiveconf hive.server2.thrift.port=10000 --hiveconf hive.root.logger=INFO,console

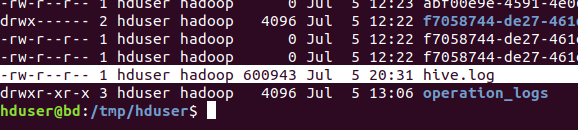

Hive Log Files

- Hive log files can be found in “/tmp/hive/hive.log”

- In my case I have not created hive user ie its “/tmp/hduser/hive.log”

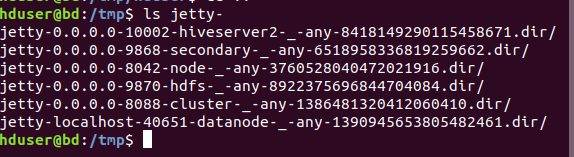

- Hive jetty web app in “/tmp/jetty*”

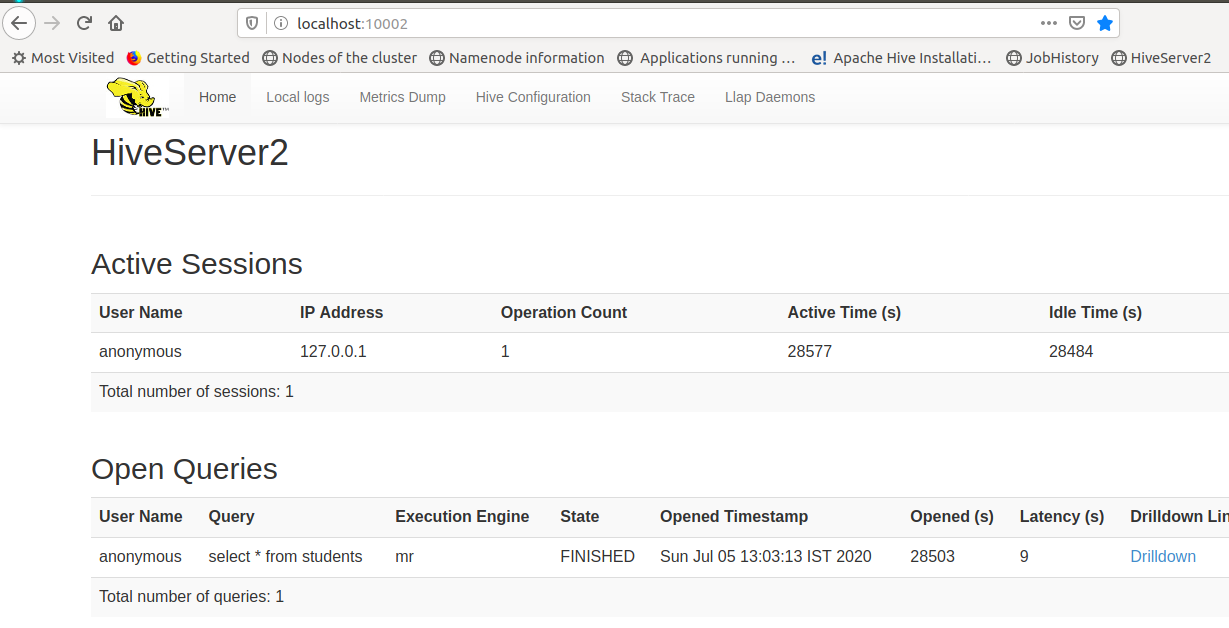

Hive User Interface and Port

HiveServer2: 10000 (hive.server2.thrift.port)

HiveServer2 Web UI: 10002 (hive.server2.webui.port)

Metastore: 9083

URL:localhost:10002

Hive Metastore

CREATE HIVEMETASRORELOG DIRECTORY

hduser@bd:mkdir ~/hivemetastorelog

hduser@bd:cd ~/hivemetastorelog

#Start Hive MetaStore

hduser@bd:nohup hive --service metastore &

#Check "nohup.out" file

hduser@bd:tail -f ~/hiveserver2log/nohup.out

Starting Hive Metastore Server

Hive MetaStore: status, log files

hduser@bd:~$ jps -ml 29170 org.apache.hadoop.util.RunJar /usr/local/hive/apache-hive-3.1.2-bin/lib/hive-beeline-3.1.2.jar org.apache.hive.beeline.BeeLine 30067 org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode 30531 org.apache.hadoop.yarn.server.nodemanager.NodeManager 29523 org.apache.hadoop.hdfs.server.namenode.NameNode 18643 org.apache.hadoop.util.RunJar /usr/local/hive/apache-hive-3.1.2-bin/lib/hive-service-3.1.2.jar org.apache.hive.service.server.HiveServer2 --hiveconf hive.server2.thrift.port=10000 --hiveconf hive.root.logger=INFO,console 30326 org.apache.hadoop.yarn.server.resourcemanager.ResourceManager 22807 org.apache.hadoop.util.RunJar /usr/local/hive/apache-hive-3.1.2-bin/lib/hive-beeline-3.1.2.jar org.apache.hive.beeline.BeeLine 29721 org.apache.hadoop.hdfs.server.datanode.DataNode 31034 org.apache.hadoop.util.RunJar /usr/local/hive/apache-hive-3.1.2-bin/lib/hive-metastore-3.1.2.jar org.apache.hadoop.hive.metastore.HiveMetaStore 25950 sun.tools.jps.Jps -ml

#Pass process id

hduser@bd:~$ ps -fp 31034 | less

UID PID PPID C STIME TTY TIME CMD

hduser 31034 2662 0 12:28 pts/0 00:01:00 /opt/jdk/jdk1.8.0_181/bin/java -Dproc_jar -Djava.library.path=/usr/local/hadoop/lib/native

-Dproc_metastore -Dlog4j.configurationFile=hive-log4j2.properties -Djava.util.logging.config.file=/usr/local/hive/apache-hive-3.1.2-bin/conf/parquet-logging.properties -Dyarn.log.dir=/usr/local/hadoop/logs -Dyarn.log.file=hadoop.log -Dyarn.home.dir=/usr/local/hadoop -Dyarn.root.logger=INFO,console -Xmx512m -Dhadoop.log.dir=/usr/local/hadoop/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/usr/local/hadoop -Dhadoop.id.str=hduser -Dhadoop.root.logger=INFO,console -Dhadoop.policy.file=hadoop-policy.xml -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.util.RunJar /usr/local/hive/apache-hive-3.1.2-bin/lib/hive-metastore-3.1.2.jar org.apache.hadoop.hive.metastore.HiveMetaStore

c) Beeline – Command Line Shell

Launch Beeline:

hduser@bd:~$ beeline Beeline version 3.1.2 by Apache Hive beeline>

CONNECT TO HIVE USING BEELINE

beeline> !connect jdbc:hive2://localhost:10000

Connecting to jdbc:hive2://localhost:10000

Enter username for jdbc:hive2://localhost:10000: hive

Enter password for jdbc:hive2://localhost:10000: ****

ERROR : you might get the following below error using hive or other user, In my case I haven’t created hive user so error will come

beeline> !connect jdbc:hive2://localhost:10000 Connecting to jdbc:hive2://localhost:10000 Enter username for jdbc:hive2://localhost:10000: hive Enter password for jdbc:hive2://localhost:10000: **** 20/07/06 00:01:30 [main]: WARN jdbc.HiveConnection: Failed to connect to localhost:10000 Error: Could not open client transport with JDBC Uri: jdbc:hive2://localhost:10000: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: hduser is not allowed to impersonate hive (state=08S01,code=0) beeline>

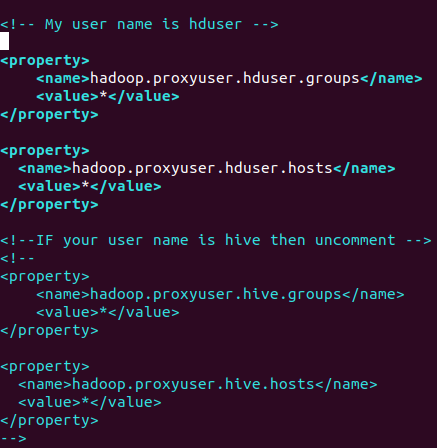

Fix this error, you have to edit the file ${HADOOP_HOME}/etc/hadoop/core-site.xml and add the following. In my case user name is hduser so change the configuration and make value as *

hadoop.proxyuser.<your_username>.groups

hadoop.proxyuser.<your_username>.hosts

<!-- My user name is hduser -->

<property>

<name>hadoop.proxyuser.hduser.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hduser.hosts</name>

<value>*</value>

</property>

<!--IF your user name is hive then uncomment -->

<!--

<property>

<name>hadoop.proxyuser.hive.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hive.hosts</name>

<value>*</value>

</property>

-->

RESTART Hadoop Cluster to reload changes

Beeline Try Again with New Configuration

hduser@bd:/usr/local/hadoop/sbin$ beeline SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hive/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory] Beeline version 3.1.2 by Apache Hive beeline> !connect jdbc:hive2://localhost:10000 Connecting to jdbc:hive2://localhost:10000 Enter username for jdbc:hive2://localhost:10000: Enter password for jdbc:hive2://localhost:10000: Connected to: Apache Hive (version 3.1.2) Driver: Hive JDBC (version 3.1.2) Transaction isolation: TRANSACTION_REPEATABLE_READ

#SHOW DATABASES 0: jdbc:hive2://localhost:10000> show databases; INFO : Compiling command(queryId=hduser_20200706002128_6969a66e-c058-4907-a63f-1274f3fb61fc): show databases INFO : Concurrency mode is disabled, not creating a lock manager INFO : Semantic Analysis Completed (retrial = false) INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null) INFO : Completed compiling command(queryId=hduser_20200706002128_6969a66e-c058-4907-a63f-1274f3fb61fc); Time taken: 0.007 seconds INFO : Concurrency mode is disabled, not creating a lock manager INFO : Executing command(queryId=hduser_20200706002128_6969a66e-c058-4907-a63f-1274f3fb61fc): show databases INFO : Starting task [Stage-0:DDL] in serial mode INFO : Completed executing command(queryId=hduser_20200706002128_6969a66e-c058-4907-a63f-1274f3fb61fc); Time taken: 0.019 seconds INFO : OK INFO : Concurrency mode is disabled, not creating a lock manager +----------------+ | database_name | +----------------+ | default | | sqlnosql | +----------------+ 2 rows selected (0.217 seconds)

#USE SPECIFIC DATABASE 0: jdbc:hive2://localhost:10000> use sqlnosql; INFO : Compiling command(queryId=hduser_20200706002135_7a7b1f05-ca60-4efc-b719-f9bb95980aac): use sqlnosql INFO : Concurrency mode is disabled, not creating a lock manager INFO : Semantic Analysis Completed (retrial = false) INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null) INFO : Completed compiling command(queryId=hduser_20200706002135_7a7b1f05-ca60-4efc-b719-f9bb95980aac); Time taken: 0.012 seconds INFO : Concurrency mode is disabled, not creating a lock manager INFO : Executing command(queryId=hduser_20200706002135_7a7b1f05-ca60-4efc-b719-f9bb95980aac): use sqlnosql INFO : Starting task [Stage-0:DDL] in serial mode INFO : Completed executing command(queryId=hduser_20200706002135_7a7b1f05-ca60-4efc-b719-f9bb95980aac); Time taken: 0.028 seconds INFO : OK INFO : Concurrency mode is disabled, not creating a lock manager No rows affected (0.074 seconds)

#LIST TABLES 0: jdbc:hive2://localhost:10000> show tables; INFO : Compiling command(queryId=hduser_20200706002140_a365d5e3-1a36-48af-8033-c000230ce531): show tables INFO : Concurrency mode is disabled, not creating a lock manager INFO : Semantic Analysis Completed (retrial = false) INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:tab_name, type:string, comment:from deserializer)], properties:null) INFO : Completed compiling command(queryId=hduser_20200706002140_a365d5e3-1a36-48af-8033-c000230ce531); Time taken: 0.017 seconds INFO : Concurrency mode is disabled, not creating a lock manager INFO : Executing command(queryId=hduser_20200706002140_a365d5e3-1a36-48af-8033-c000230ce531): show tables INFO : Starting task [Stage-0:DDL] in serial mode INFO : Completed executing command(queryId=hduser_20200706002140_a365d5e3-1a36-48af-8033-c000230ce531); Time taken: 0.14 seconds INFO : OK INFO : Concurrency mode is disabled, not creating a lock manager +-----------+ | tab_name | +-----------+ | students | +-----------+ 1 row selected (0.206 seconds) #DESCRIBE TABLE SCHEMA 0: jdbc:hive2://localhost:10000> describe students; INFO : Compiling command(queryId=hduser_20200706002152_0f7e7bc7-038a-45d1-8b38-a042b8f4458c): describe students INFO : Concurrency mode is disabled, not creating a lock manager INFO : Semantic Analysis Completed (retrial = false) INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:col_name, type:string, comment:from deserializer), FieldSchema(name:data_type, type:string, comment:from deserializer), FieldSchema(name:comment, type:string, comment:from deserializer)], properties:null) INFO : Completed compiling command(queryId=hduser_20200706002152_0f7e7bc7-038a-45d1-8b38-a042b8f4458c); Time taken: 0.114 seconds INFO : Concurrency mode is disabled, not creating a lock manager INFO : Executing command(queryId=hduser_20200706002152_0f7e7bc7-038a-45d1-8b38-a042b8f4458c): describe students INFO : Starting task [Stage-0:DDL] in serial mode INFO : Completed executing command(queryId=hduser_20200706002152_0f7e7bc7-038a-45d1-8b38-a042b8f4458c); Time taken: 0.033 seconds INFO : OK INFO : Concurrency mode is disabled, not creating a lock manager +-----------+------------+----------+ | col_name | data_type | comment | +-----------+------------+----------+ | id | int | | | name | string | | +-----------+------------+----------+ 2 rows selected (0.178 seconds) 0: jdbc:hive2://localhost:10000>